Author: MailClickConvert Team

Last Updated: October 29, 2025

Introduction

Sometimes the difference between a cold email that gets a reply and one that gets ignored comes down to a few simple changes, like the subject line, the message tone, or the time of day you send it.

That’s where A/B testing makes the difference.

A/B testing helps you find out what your audience really responds to. Instead of guessing what works, you send two versions of your email, Version A and Version B, and let the results show which one performs better. It’s a simple, structured way to learn from real behavior.

This guide walks you through how to run A/B tests for cold emails, interpret your data, and use small, consistent adjustments to drive real improvements.

💡 Related reading: Cold Email Marketing Guide – Everything You Need to Know

What A/B testing means in cold email

A/B testing (or split testing) is a method of comparing two versions of an email to see which one performs better.

Imagine sending 100 emails with two slightly different subject lines.

Half go out as Version A:

“Quick idea to help boost your sales pipeline.”

The other half go out as Version B:

“Can I share one way to increase qualified leads?”

You then check the data. Which subject line had more opens? Which email got more replies? That’s your winner.

You can run A/B tests for many things: tone, length, message structure, or timing. The goal isn’t to guess. It’s to learn, test, and repeat until your outreach consistently performs better.

Think of it as improving your cold email formula, one variable at a time.

Why testing matters

Today’s inboxes are crowded, and filters are smarter than ever. If your message doesn’t immediately connect with your audience, it’s ignored or filtered out.

A/B testing helps you stand out by showing what actually resonates with your audience. Here’s why it matters:

- It replaces assumptions with data. You stop relying on gut feelings and start seeing patterns that prove what works.

- It boosts engagement. Testing subject lines, CTAs, and tone helps increase open and reply rates.

- It improves deliverability. The more people open and engage, the stronger your sender reputation, meaning more emails reach inboxes.

- It builds long-term performance. Each test makes your next campaign more effective.

Top-performing senders rely on testing because it gives them clarity and consistency, not luck.

🔍 Explore more tips: Cold Email Templates That Actually Work for B2B Lead Gen in 2025

What you can test

You don’t need to change everything. Start simple.

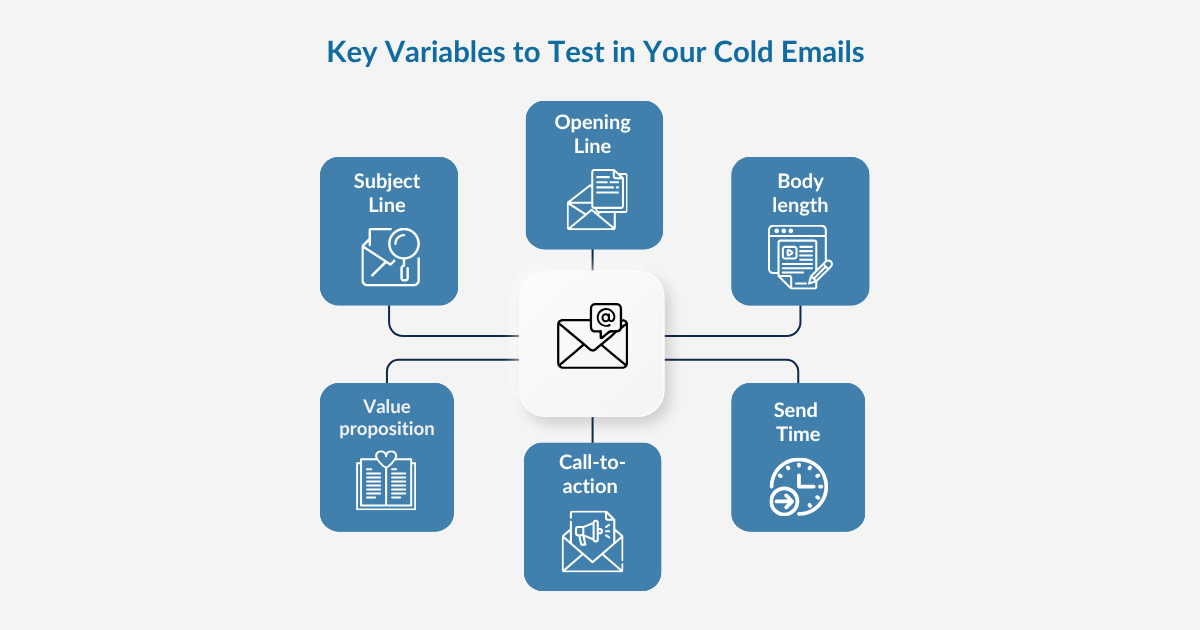

- Subject lines: Try one with a question and one with a statement.

- Opening lines: Compare a personal greeting to a direct approach.

- Body length: Short paragraph vs. slightly longer one.

- Value proposition: Emphasize different benefits, such as cost savings vs. time savings.

- Call-to-action (CTA): “Schedule a quick chat” vs. “Want to learn more?”

- Send time: Morning vs. afternoon, or Tuesday vs. Thursday.

The key rule: test one thing at a time. If you change both the subject line and the CTA, you won’t know which one actually made the impact.

How to run your first A/B test

Running an A/B test doesn’t need to be complicated.

- Pick one variable, for example your subject line.

- Split your list evenly into two groups (A and B).

- Send both versions simultaneously to keep results fair.

- Track performance using open rates, replies, or clicks.
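If you are splitting a list by hand, the even split is easy to sketch in code. This is a minimal illustration, not how any particular tool does it: the `split_ab` helper and the example addresses are hypothetical, and it assumes your contacts are already in a plain list. Shuffling first matters, because a list sorted by company or signup date would put different kinds of people in each group.

```python
import random

def split_ab(contacts, seed=None):
    """Shuffle a copy of the contact list and split it into two near-equal groups."""
    rng = random.Random(seed)      # a fixed seed makes the split reproducible
    shuffled = list(contacts)      # copy so the original list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical contact list, for illustration only
contacts = [f"lead{i}@example.com" for i in range(100)]
group_a, group_b = split_ab(contacts, seed=42)
print(len(group_a), len(group_b))
```

With an odd number of contacts, group B simply gets one extra address.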

Once you know which version wins, use it in your next campaign. Then test something new. With every cycle, your results get sharper and more predictable.

💡 Before testing: Make sure your contact list is validated. Read our blog: Can You Send Emails to Purchased Lists?

Example: Subject line test in action

Let’s say you’re reaching out to HR managers. You write two versions of your email:

Version A:

Subject: “Quick question about employee onboarding”

Message: A short, conversational note about improving new hire training.

Version B:

Subject: “How top HR teams simplify onboarding in 2025”

Message: Similar structure, but slightly more formal tone.

After sending to 1,000 contacts (500 each), you notice Version A has a 32% open rate while Version B has 22%. Since people decide whether to open before they read the body, that gap points to the subject line: your audience seems to prefer casual, conversational subject lines.

Next time, you can test something new, maybe the CTA or email length, building on what you already know.
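How confident can you be that a 32% vs. 22% gap is not just chance? One common way to check is a two-proportion z-test. The sketch below is an assumption on our part about how you might do that check, not a required step: it takes the numbers from the example above (160 opens out of 500 for Version A, 110 out of 500 for Version B) and uses only Python's standard library. The function name is made up for this illustration.

```python
import math

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = success_a / n_a
    rate_b = success_b / n_b
    # Pooled rate: what both groups would share if there were no real difference
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# 32% of 500 = 160 opens for Version A, 22% of 500 = 110 opens for Version B
z, p_value = two_proportion_z_test(160, 500, 110, 500)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

For these numbers z comes out at roughly 3.56 and the p-value is well under 0.01, so a gap this size on 500 contacts per group is unlikely to be random noise. The same gap on 50 contacts per group would be far less convincing.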

How to read the results

Once your emails are sent, it’s time to analyze what happened.

- Open rate: Which subject line grabbed attention?

- Reply rate: Which message inspired action?

- Click rate: Which version encouraged engagement (if links were included)?

When reviewing results, always look for clear, repeatable patterns. Maybe short subject lines win consistently, or CTAs phrased as questions get more replies. Those trends become your new playbook.

And remember: reliable data depends on a clean list. If half your emails bounce, your results won’t mean much. That’s why it’s critical to validate your contacts before every test.
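To see why bounces matter, it helps to calculate each rate against delivered emails rather than sent emails. The following is a rough sketch with made-up counts. The `VariantStats` class and every number in it are hypothetical, and your sending tool may define these metrics differently.

```python
from dataclasses import dataclass

@dataclass
class VariantStats:
    sent: int
    bounced: int
    opened: int
    replied: int
    clicked: int

    @property
    def delivered(self) -> int:
        # Only emails that reached a mailbox can be opened or answered
        return self.sent - self.bounced

    def rate(self, count: int) -> float:
        """Share of delivered emails that produced the given outcome."""
        return count / self.delivered if self.delivered else 0.0

# Illustrative numbers only, not real campaign data
version_a = VariantStats(sent=500, bounced=20, opened=144, replied=24, clicked=12)

print(f"open rate:  {version_a.rate(version_a.opened):.1%}")
print(f"reply rate: {version_a.rate(version_a.replied):.1%}")
print(f"click rate: {version_a.rate(version_a.clicked):.1%}")
```

If one group has many more bounces than the other, rates based on sent emails will understate that group's performance, which is one more reason to validate the list first.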

Common mistakes to avoid

A/B testing is simple in concept but easy to mismanage. The most common mistakes include:

- Testing too many variables at once.

- Drawing conclusions too early.

- Using unverified or outdated lists that skew results.

Keep things clean, focused, and consistent. Change one element, divide your test evenly, and give the data enough time to show a clear pattern.
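One way to avoid drawing conclusions too early is to estimate, before you send, how many contacts each group needs. The sketch below uses a standard sample-size approximation for comparing two rates. It is an assumption about how you might plan a test, not a rule from this guide: the function name is invented, and the defaults (5% significance, 80% power) are common conventions you can change.

```python
import math
from statistics import NormalDist

def contacts_per_group(baseline_rate, target_rate, alpha=0.05, power=0.80):
    """Approximate contacts needed per group to detect baseline vs. target rate."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided significance level
    z_power = NormalDist().inv_cdf(power)
    mean_rate = (baseline_rate + target_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * mean_rate * (1 - mean_rate))
        + z_power * math.sqrt(
            baseline_rate * (1 - baseline_rate) + target_rate * (1 - target_rate)
        )
    ) ** 2
    return math.ceil(numerator / (target_rate - baseline_rate) ** 2)

# Hypothetical goal: lift the open rate from 20% to 25%
n = contacts_per_group(0.20, 0.25)
print(n)
```

The smaller the improvement you hope to detect, the more contacts you need. A jump from 20% to 30% needs far fewer people per group than a jump from 20% to 25%.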

💡 Related reading: Can You Send Emails to Purchased Lists?

Turning small wins into bigger ones

The best part about A/B testing is how the small wins add up.

A stronger subject line improves open rates, a clear CTA boosts replies, and cleaner data improves deliverability. Over time, you’ll have a cold email process that gets reliable results across campaigns.

How MailClickConvert helps

Running A/B tests manually can be time-consuming: dividing lists, tracking results, and trying to make sense of the data. That’s where MailClickConvert comes in.

MCC simplifies testing by giving you everything in one place:

- Automatically split your contact list into two test groups (A and B).

- Send both versions under the same conditions.

- Track open rates, reply rates, and engagement in real time.

- View performance comparisons directly in your dashboard.

- Keep your data accurate with built-in list cleaning and deliverability tools.

- Go beyond A/B testing with A/Z testing — test multiple variations at once (like five or ten subject lines) to see which version truly wins across larger campaigns.

With MCC, you can run smarter tests without juggling spreadsheets or multiple tools. Clean data ensures accurate results, and deliverability protection makes sure both versions actually reach inboxes.

Instead of guessing, you’ll know what works based on reliable numbers.

Final thoughts

Cold email success isn’t random. It’s built through testing, iteration, and data-driven improvement. Every campaign teaches you something new about your audience.

Start simple: test subject lines, CTAs, or tone. Keep your list clean, track your results carefully, and use what works to shape your next campaign.

Over time, these small improvements lead to major results. Your emails get opened more often, your replies increase, and your sender reputation grows stronger.

If you’re ready to start testing with confidence, MailClickConvert gives you everything you need in one place: clean lists, real-time analytics, and effortless tracking.

Test smarter. Send better. Turn cold emails into real conversations.
